WHAT?

Cowbird claims in its “about” section that it is a multimedia storytelling tool.

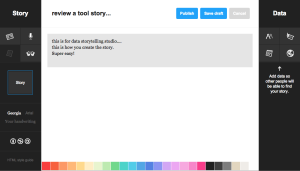

It is free, and it gathers a library of human experience built with a simple set of storytelling tools. By "tools" they mean a combination of pictures, text, and sound used to create a beautiful record of your human experience.

The idea came from Jonathan Harris, an artist and computer scientist. I know the artist from a great project of his called The Whale Hunt.

Cowbird started in his earlier project, Today, a ritual of taking a picture a day, writing a short story and posting it online before bed. From that initial project, it took Harris and a small team two years to build what has now become a real library of human stories. What began as a personal project has become a small company.

WHAT FOR?

So, Cowbird is a platform where you can go to tell a story that you think is worth sharing with a wider community of lovers of good stories: stories that are not only good but also deep and personal.

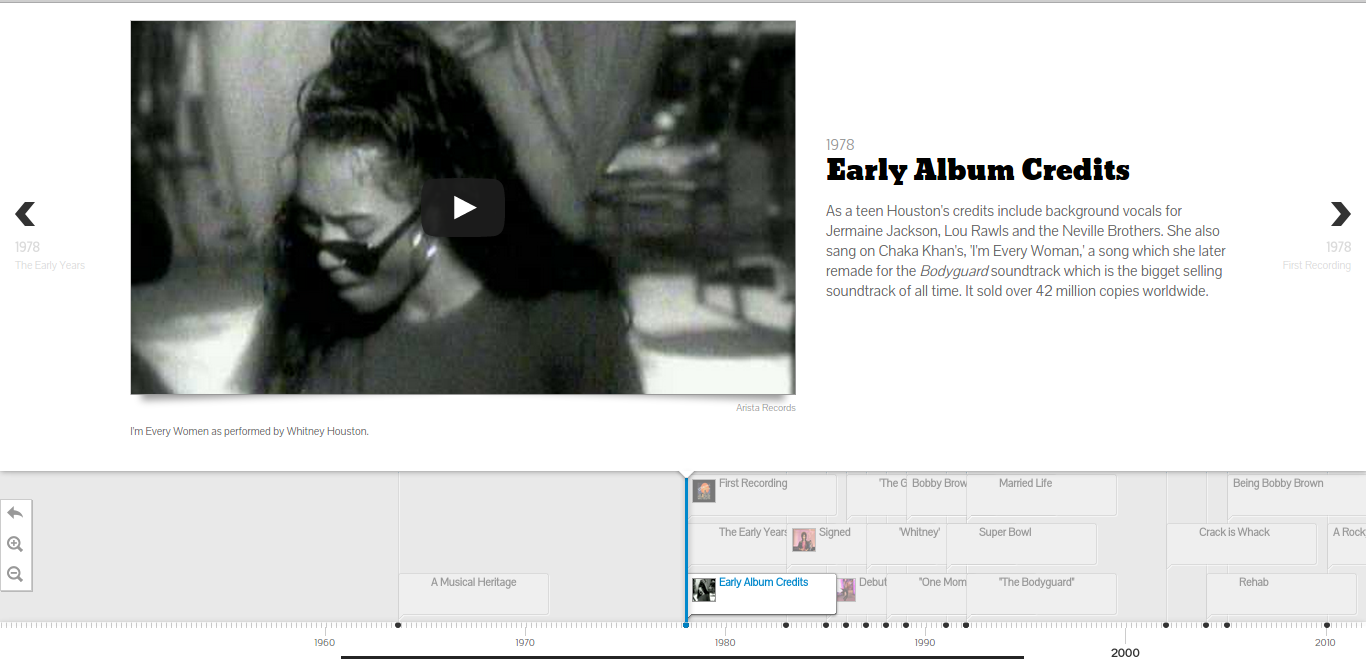

The idea is for people to tell short location-tagged stories based on their own experience using text, photos and sound or a mix thereof.

The more personal and authentic your story, the more it will resonate with the still relatively small Cowbird community. As of today, March 30th 2015, the website claims that 43,654 authors from 183 countries have told 79,493 stories on 27,721 topics.

Harris says Cowbird has three objectives:

+ Create a space for a deeper, longer-lasting kind of self-expression than you’re likely to find anywhere else on the Web.

+ Pioneer a new form of participatory journalism, grounded in the simple human stories behind major news events.

+ Build a public library of human experience — a kind of Wikipedia for life experience.

HOW TO GET STARTED?

Well there are, I think, two types of users who can experience Cowbird.

There is the active participant and the passive one, each being a witness for life, as the tagline says. The active participant is the one who creates an account, shares some information about himself or herself, and starts sharing the kinds of stories he or she wants to tell or curate. The active user is the one who documents through personal stories.

The passive participant is the one who browses and discovers, experiencing other perspectives through the different possible paths: search, stories, seeds, topics, places, dates, etc.

HOW EASY?

Cowbird provides “a warm and welcoming environment” for storytelling, which is home to a global community of storytellers.

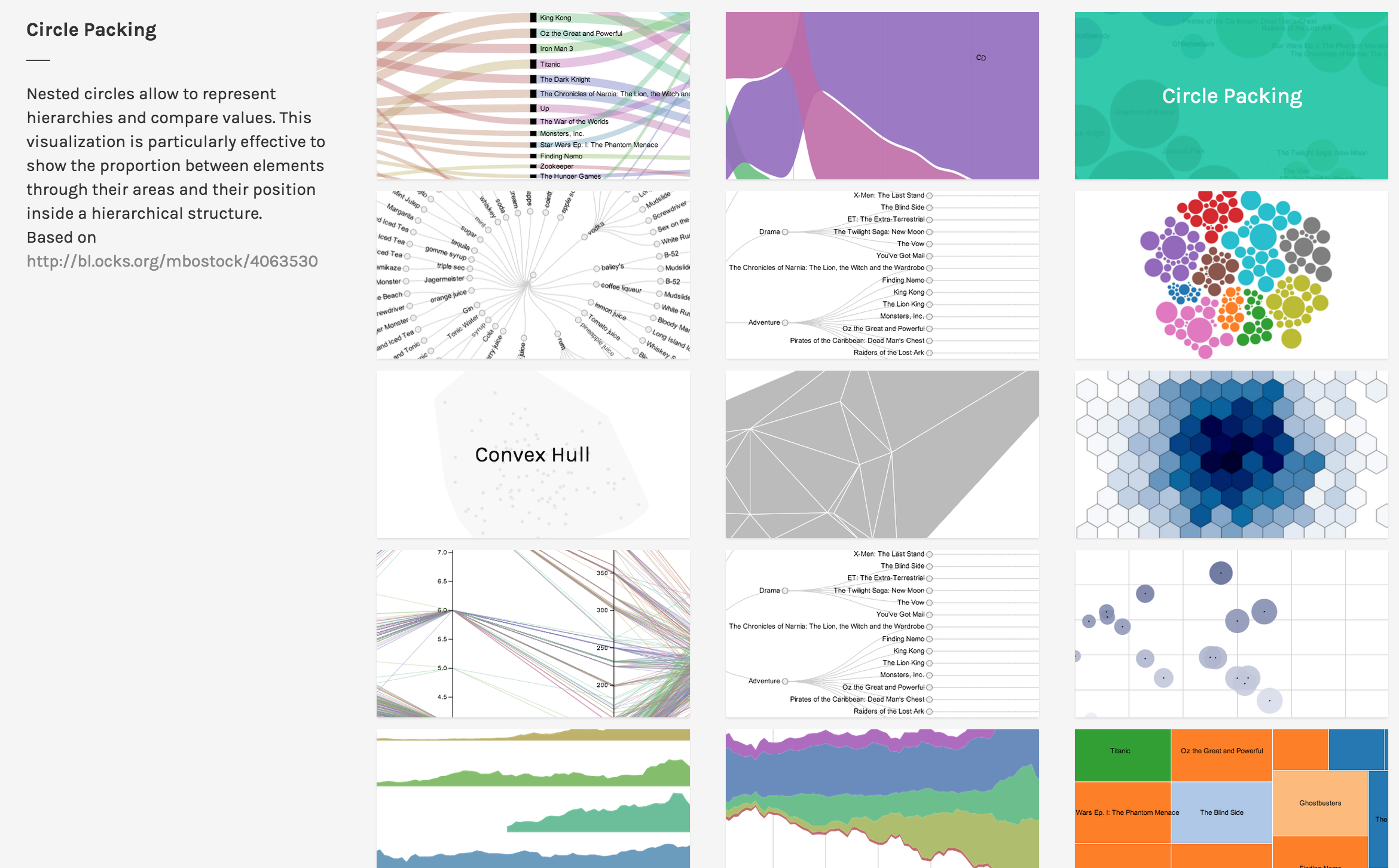

No particular skill is needed to use it. I just created an account and confirmed my email. The website is beautifully designed and provides its community of users with typography, infographics and the possibility to upload photos.

The difficult part for an ‘active’ user would be the approach and the commitment. What approach should you take? You have to find some kind of balance between the personal challenge and the journalistic one. It is an editorial question: how do you curate your story?

Cowbird takes time and invites people to reflect on themselves. Cowbird “asks more of you than dashing off tweets from a cell phone, but we think it gives back a lot more, too,” said Harris.

WOULD I RECOMMEND IT?

Of course, as a passive participant first. Browsing and discovering the stories is an easy and simple way to get inspiration.

WOULD I USE IT?

Yes, if I wanted to tell stories and share them on a platform other than a blog, for instance.

USAGE

I think that for journalists-to-be it is a great platform for improving their storytelling and connecting with an authentic audience. Through the most viewed or most loved sections, you can see your story becoming popular.

Personally, I find that hearing a story's audio alongside the photo is more compelling and helps me better understand the experience being conveyed.

I am not sure I would use Cowbird in my final project, but I would definitely use it to find stories and inspiration, since I consider Cowbird more of a library.